WG2 – Transparent versus Black Box Decision-Support Models in the Financial Industry

Main Aims

Regulators need to ensure that the rules and criteria used to judge the admissibility of decision algorithms employed by financial institutions are transparent, so as to avoid negative effects on the industry such as discrimination among market players. It is therefore important that regulators and policy-makers have conceptual tools and research at their disposal to make timely, well-reasoned decisions on how to regulate the use of data science techniques.

During this Action, Working Group 2 (WG2) will develop prototypes that demonstrate how quantitative methods can improve the transparency of so-called “black box” models. The WG will also publish policy papers proposing new regulation and guidelines for industry. Our objective is to lower, to the extent possible, the barriers to using more advanced methods.

In addition, our work will address the limited-data and small-sample problems that arise when the events of interest occur infrequently (e.g., defaults or fraud), providing solutions that augment existing methods used in the financial industry. The WG will employ methods drawn from econometrics and statistics to transparently quantify and, to the extent possible, alleviate the impact of these problems on inference and prediction in financial decision making.
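As a small illustration of why rare events complicate inference, the sketch below compares two standard confidence intervals for an event rate estimated from few observed events. It is only an example under stated assumptions: the portfolio size and default count are hypothetical, and the Wilson score interval is shown as one well-known alternative to the normal-approximation (Wald) interval, not as a method chosen by the WG.

```python
import math

def wald_interval(events, n, z=1.959964):
    """Normal-approximation (Wald) interval for a proportion."""
    p = events / n
    half_width = z * math.sqrt(p * (1 - p) / n)
    return p - half_width, p + half_width

def wilson_interval(events, n, z=1.959964):
    """Wilson score interval, which behaves better when events are rare."""
    p = events / n
    denom = 1 + z * z / n
    centre = (p + z * z / (2 * n)) / denom
    half_width = z * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n)) / denom
    return centre - half_width, centre + half_width

# Hypothetical portfolio: 3 defaults observed among 1000 loans.
events, n = 3, 1000
wald = wald_interval(events, n)
wilson = wilson_interval(events, n)

print(f"observed default rate: {events / n:.4f}")
print(f"Wald 95% interval:     [{wald[0]:.4f}, {wald[1]:.4f}]")
print(f"Wilson 95% interval:   [{wilson[0]:.4f}, {wilson[1]:.4f}]")
```

With so few events, the Wald interval extends below zero, which is not a valid default rate, while the Wilson interval stays inside the unit interval. Reporting the interval alongside the point estimate is one transparent way to show how much a small sample limits what can be concluded.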

Objectives

- The development of conceptual and methodological tools for establishing when black-box models are admissible and, to the extent possible, making them more transparent and/or replacing them with interpretable and explainable models:

  - Classification of algorithms from a range of disciplinary domains (especially ML, Econometrics) according to the predictability of the variables being modelled/forecast,

  - Identification of methods for mapping results of black-box models to explainable and interpretable ones, at least ex-post,

  - Better understanding of the conceptual and empirical nexus between identification of causality within models and the interpretability/explainability of the models.

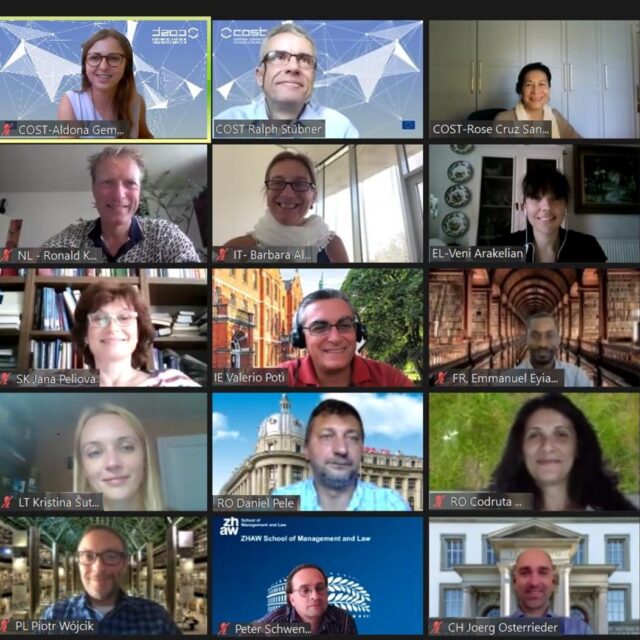

- Establishing working relationships with regulators and practitioners’ communities, to receive essential input on how to pursue the previous objective and to share the results of the investigation.
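The objective above on mapping black-box results to interpretable ones ex-post can be illustrated with a surrogate model: a simple rule is fitted to the predictions of the opaque model, and its agreement with those predictions (its fidelity) is reported. The sketch below is illustrative only. The `black_box` scorer, the feature names, and the simulated applicants are all hypothetical, and a one-threshold rule is used purely because it is easy to read, not because the WG prescribes it.

```python
import random

def black_box(income, debt_ratio):
    """Hypothetical opaque scorer standing in for any black-box model."""
    score = 0.00004 * income - 3.0 * debt_ratio ** 2 + 0.3
    return 1 if score > 0 else 0  # 1 = approve, 0 = reject

def fit_stump(rows, labels):
    """Find the single-feature threshold rule that best reproduces labels.

    Returns (fidelity, feature_index, threshold, label_above_threshold).
    """
    best = None
    for j in range(len(rows[0])):
        for threshold in sorted({row[j] for row in rows}):
            for label_above in (1, 0):
                preds = [label_above if row[j] > threshold else 1 - label_above
                         for row in rows]
                fidelity = sum(p == y for p, y in zip(preds, labels)) / len(labels)
                if best is None or fidelity > best[0]:
                    best = (fidelity, j, threshold, label_above)
    return best

# Simulated applicants (hypothetical data, fixed seed for reproducibility).
rng = random.Random(0)
feature_names = ["income", "debt_ratio"]
rows = [(rng.uniform(20_000, 100_000), rng.uniform(0.0, 1.2)) for _ in range(200)]

# The surrogate is trained on the black box's outputs, not on true outcomes.
bb_labels = [black_box(income, debt) for income, debt in rows]
fidelity, feature, threshold, label_above = fit_stump(rows, bb_labels)

print(f"surrogate rule: predict {label_above} if "
      f"{feature_names[feature]} > {threshold:.3f}, else {1 - label_above}")
print(f"fidelity to black box: {fidelity:.2%}")
```

The fidelity figure indicates how much of the black box's behaviour the readable rule captures on this sample. A low value signals that the simple explanation should not be relied upon, which is itself useful information when judging admissibility.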

Tasks

- Review the existing literature on AI (including machine learning) approaches as they are used in the finance industry and identify the most important applications.

- Develop prototypes to demonstrate quantitative methods to improve transparency (including explainability and interpretability) of “black-box” models and/or to provide alternatives.

- Interact with stakeholders, in particular regulators, to raise awareness of the research questions and discuss potential solutions.

- Develop a roadmap for including the results in European regulation and policies, in cooperation with regulators.

- Publish policy papers to suggest new regulation.

- Develop a handbook or wiki page describing the prototypes above.

Contacts

- Prof. Dr. Petre Lameski PhD (WG2 Leader)

  - Assistant Professor and Vice-Dean for Academic Affairs

  - University of Ss. Cyril and Methodius in Skopje

  - Faculty of Computer Science and Engineering

  - Ruger Boskovik 16, PO Box 393, 1000, Skopje, N. Macedonia

  - Email: lameski@finki.ukim.mk

- Dr. Kristina Sutiene

  - Associate Professor

  - Kaunas University of Technology

  - Faculty of Mathematics and Natural Sciences

  - Studentų 50-144, LT-51368 Kaunas, Lithuania

  - Email: kristina.sutiene@ktu.lt

- Dr. Emmanuel Eyiah-Donkor

  - Assistant Professor of Finance

  - Rennes School of Business

  - 35065 Rennes

  - France

  - Email: emmanuel.eyiah-donkor@rennes-sb.com