WG3 – Transparency into Investment Product Performance for Clients

From overfitting to underperformance

Very few active investment products consistently outperform their passive benchmarks after fees. Even “smart beta” strategies offered by asset managers have systematically underperformed. An empirical analysis by Suhonen et al. (2016) showed that systematic asset managers tend to overfit their investment strategies during the development phase, which results in significant underperformance after go-live. Lopez de Prado and Lewis (2018) attribute this effect to a “proliferation of false discoveries” about sources of investment performance and warn about the resulting risks. Although academic publications propose methods to quantify overfitting, these methods require data about “failed trials”, which only the product vendors have. Actively managed investment funds that have underperformed their passive benchmarks continue to suffer outflows of assets under management as cheaper ETFs grow in popularity. Not surprisingly, some active investment managers warn that this trend could reduce market efficiency.

The need to uncover failed trials

Bailey and Lopez de Prado (2014), Bailey et al. (2015), and Lopez de Prado and Lewis (2018) analyzed tools to address overfitting. However, these tools need the “failed trials” produced during the development process, and those trials are known only to the people involved in it. For anyone outside that process, the tools are therefore inapplicable.
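To see why the failed trials matter, consider the deflated Sharpe ratio of Bailey and Lopez de Prado (2014), one of the tools in this literature. The sketch below is a minimal Python version (3.8+, standard library only), assuming per-period (non-annualized) Sharpe ratios and using the paper's approximation for the expected maximum Sharpe ratio among N independent trials. The function names are hypothetical. Both inputs that drive the correction, the number of trials and the dispersion of their Sharpe ratios, come from the trial log, which is exactly what outsiders cannot see.

```python
import math
from statistics import NormalDist, variance

EULER_GAMMA = 0.5772156649015329  # Euler-Mascheroni constant

def expected_max_sharpe(trial_sharpes):
    """Approximate expected maximum Sharpe ratio among N unskilled trials."""
    n = len(trial_sharpes)
    if n < 2:
        raise ValueError("need at least two trials")
    z = NormalDist().inv_cdf
    sd = math.sqrt(variance(trial_sharpes))  # dispersion across all trials
    return sd * ((1 - EULER_GAMMA) * z(1 - 1 / n)
                 + EULER_GAMMA * z(1 - 1 / (n * math.e)))

def deflated_sharpe_ratio(sr_hat, trial_sharpes, n_obs, skew=0.0, kurt=3.0):
    """Probability that the selected strategy's true Sharpe ratio exceeds
    the level expected from selection among the trials alone."""
    sr0 = expected_max_sharpe(trial_sharpes)
    # Standard-error adjustment for non-normal returns (kurt is non-excess).
    var_term = 1 - skew * sr_hat + (kurt - 1) / 4 * sr_hat ** 2
    if var_term <= 0:
        raise ValueError("invalid skewness/kurtosis combination")
    stat = (sr_hat - sr0) * math.sqrt(n_obs - 1) / math.sqrt(var_term)
    return NormalDist().cdf(stat)

# Same selected strategy, but found after 10 versus 1000 trials (illustrative).
few = deflated_sharpe_ratio(0.1, [-0.05, 0.05] * 5, n_obs=1000)
many = deflated_sharpe_ratio(0.1, [-0.05, 0.05] * 500, n_obs=1000)
print(f"DSR after 10 trials: {few:.2f}, after 1000 trials: {many:.2f}")
```

The same backtest looks far less convincing once the hidden trials are counted; without the trial log, the correction cannot be computed at all.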

Unlike product development in the pharmaceutical industry, the investment industry has no regulation on how to set up a backtest. Such a framework would require choosing an appropriate benchmark, specifying the underlying data, applying suitable tests for parameter sensitivity, and defining a clear procedure for treating failed trials.
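The four requirements listed above can be pictured as a record that is fixed before any result is seen. The following is a hypothetical sketch (the class name, fields and the crude sensitivity measure are illustrative assumptions, not an existing standard): the benchmark, data and parameter grid are declared up front, and every trial is logged, including the ones that fail.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

@dataclass
class BacktestProtocol:
    """Hypothetical pre-registered backtest study, fixed before any result is seen."""
    benchmark: str                      # benchmark the strategy will be judged against
    data_source: str                    # description of the underlying data set
    param_grid: List[Dict[str, int]]    # every parameter set that will be tried
    trials: List[Tuple[Dict[str, int], float]] = field(default_factory=list)

    def run(self, evaluate: Callable[[Dict[str, int]], float]) -> None:
        # Evaluate every registered parameter set and keep *all* scores,
        # so failed trials are logged instead of silently discarded.
        for params in self.param_grid:
            self.trials.append((params, evaluate(params)))

    def sensitivity(self) -> float:
        # Crude parameter-sensitivity measure: best minus worst trial score.
        scores = [score for _, score in self.trials]
        return max(scores) - min(scores)

protocol = BacktestProtocol(
    benchmark="hypothetical equity index",
    data_source="daily closing prices, fixed sample period",
    param_grid=[{"lookback": lb} for lb in (20, 60, 120, 250)],
)
protocol.run(lambda p: 1.0 / p["lookback"])  # toy scoring function for illustration
print(len(protocol.trials), protocol.sensitivity())
```

Keeping the full `trials` list is the point of the design: it is the input that overfitting tests need and that is usually lost.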

The goal of this WG is thus to develop consistent and reliable methods, together with the necessary synthetic scenarios, for choosing investment products ex-ante and evaluating their performance ex-post.

Progress beyond the state-of-the-art

First, this WG will address the data availability challenge. The WG will collect time series data on investment products, their underlying assets and relevant market conditions (risk factors). Some of the data will be collected directly from exchanges and websites and can be freely exchanged within the network. Other parts of the data are protected by the intellectual property rights of data vendors. To mitigate this problem, the WG will draw on methods developed by WG2 for generating synthetic financial scenarios and contribute to their development. Interpretable machine learning methods will then be used to connect the resulting strategy performance to features of the generated scenarios.

Moreover, because the “failed trials” of the backtesting process are inaccessible, the methods from the aforementioned literature cannot be applied directly. One way to address this deficiency is to simulate the development process of rule-based financial products: start from generic versions of published factors, then tweak these implementations until they match the performance characteristics of the published backtested time series of actual investment products. The WG will work to automate this “tweaking” process with machine learning approaches. The artificially generated “failed trials” will then serve as inputs to the referenced methodologies to quantify any “overfitting bias”.
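A deliberately simple version of the tweaking loop is sketched below. Everything in it is an assumption for illustration: a generic time-series momentum rule plays the published factor, random search over two parameters plays the (to be automated) tweaking, a synthetic Gaussian return series plays the market, and the target Sharpe ratio of 0.8 plays the published backtest. Every trial other than the accepted one is recorded as an artificial “failed trial”, and those Sharpe ratios could then be fed into an overfitting test such as the deflated Sharpe ratio.

```python
import math
import random
import statistics

def tsmom_sharpe(returns, lookback, skip):
    """Annualized Sharpe ratio of a generic time-series momentum rule:
    go long (short) if the trailing `lookback` returns, ending `skip`
    periods ago, sum to a positive (non-positive) number."""
    prefix = [0.0]
    for r in returns:
        prefix.append(prefix[-1] + r)  # prefix sums for O(1) window sums
    pnl = []
    for t in range(lookback + skip, len(returns)):
        signal = prefix[t - skip] - prefix[t - skip - lookback]  # data before t only
        pnl.append((1.0 if signal > 0 else -1.0) * returns[t])
    sd = statistics.stdev(pnl)
    return statistics.mean(pnl) / sd * math.sqrt(252) if sd > 0 else 0.0

def simulate_tweaking(returns, target_sharpe, n_trials=50, tol=0.05, seed=0):
    """Randomly tweak (lookback, skip) until the backtest Sharpe ratio lands
    within `tol` of the target; return the best trial and the full trial log."""
    rng = random.Random(seed)
    trials = []
    for _ in range(n_trials):
        params = {"lookback": rng.randint(5, 250), "skip": rng.randint(0, 21)}
        trials.append((params, tsmom_sharpe(returns, **params)))
        if abs(trials[-1][1] - target_sharpe) <= tol:
            break  # "published" profile matched: stop tweaking
    best = min(trials, key=lambda trial: abs(trial[1] - target_sharpe))
    return best, trials

market_rng = random.Random(42)
returns = [market_rng.gauss(0.0003, 0.01) for _ in range(2000)]  # synthetic data
best, trials = simulate_tweaking(returns, target_sharpe=0.8)  # hypothetical target
failed_sharpes = [sr for params, sr in trials if params is not best[0]]
print(best, len(failed_sharpes))
```

If the tolerance is never met within `n_trials`, the closest trial is returned anyway; a real study would report that the published profile could not be reproduced.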

Objectives

The main objectives of the working group are:

- Pruning and improving performance attribution models by contributing to the development of methodologies, such as synthetic scenario generation and interpretable machine learning, that reduce the false discovery rate in financial research and applied investment management (long-term scientific impact).

- Disseminating the results of the first objective through presentations at public conferences and active contact with European regulators and the financial industry.

- Creating the first European platform that compares the out-of-sample performance of investment products, insurance-linked investment products and asset management products available to the general public (industry impact).
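Reducing the false discovery rate across many tested strategies is a multiple-testing problem. One standard procedure for it is Benjamini-Hochberg; the sketch below applies it to rough one-sided p-values derived from per-period Sharpe ratios under an i.i.d. normal approximation. This is a generic illustration, not the methodology the WG will adopt, and the Sharpe ratios in the usage lines are made up.

```python
import math
from statistics import NormalDist

def sharpe_p_value(sr_per_period, n_obs):
    """Rough one-sided p-value for H0: true Sharpe ratio <= 0,
    assuming i.i.d. normal returns (z = SR * sqrt(T))."""
    return 1.0 - NormalDist().cdf(sr_per_period * math.sqrt(n_obs))

def benjamini_hochberg(p_values, alpha=0.05):
    """Indices of hypotheses rejected at false discovery rate alpha."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])  # ascending p-values
    k_max = 0
    for rank, idx in enumerate(order, start=1):
        # Step-up rule: find the largest rank k with p_(k) <= k * alpha / m.
        if p_values[idx] <= rank * alpha / m:
            k_max = rank
    return sorted(order[:k_max])

# Illustrative per-period Sharpe ratios of candidate strategies (not real data).
sharpes = [0.12, 0.03, 0.08, -0.01, 0.05]
p_vals = [sharpe_p_value(sr, n_obs=500) for sr in sharpes]
print(benjamini_hochberg(p_vals, alpha=0.05))
```

The step-up rule lets a hypothesis with a moderately small p-value be rejected whenever a higher-ranked one clears its threshold, which is what distinguishes it from a per-test Bonferroni cut-off.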

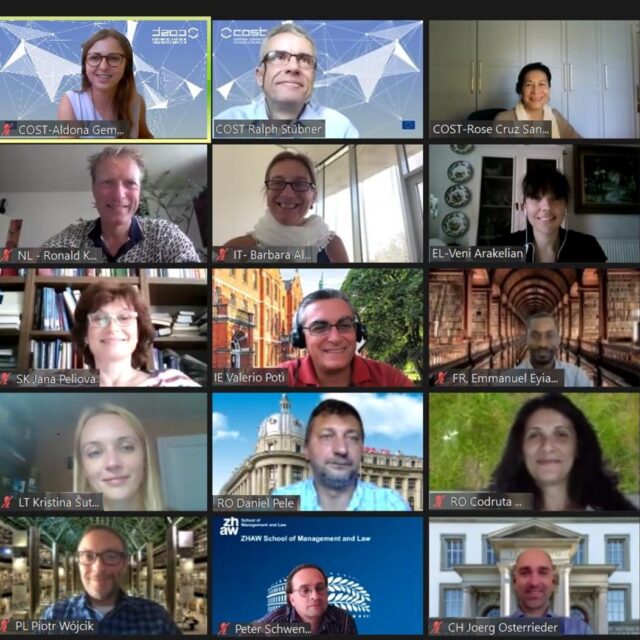

WG3 Leader

- Peter Schwendner

- Institute of Wealth & Asset Management

- Zurich University of Applied Sciences

- Technoparkstrasse 2, Postfach

- CH-8401 Winterthur

- Contact: https://www.zhaw.ch/en/about-us/person/scwp/